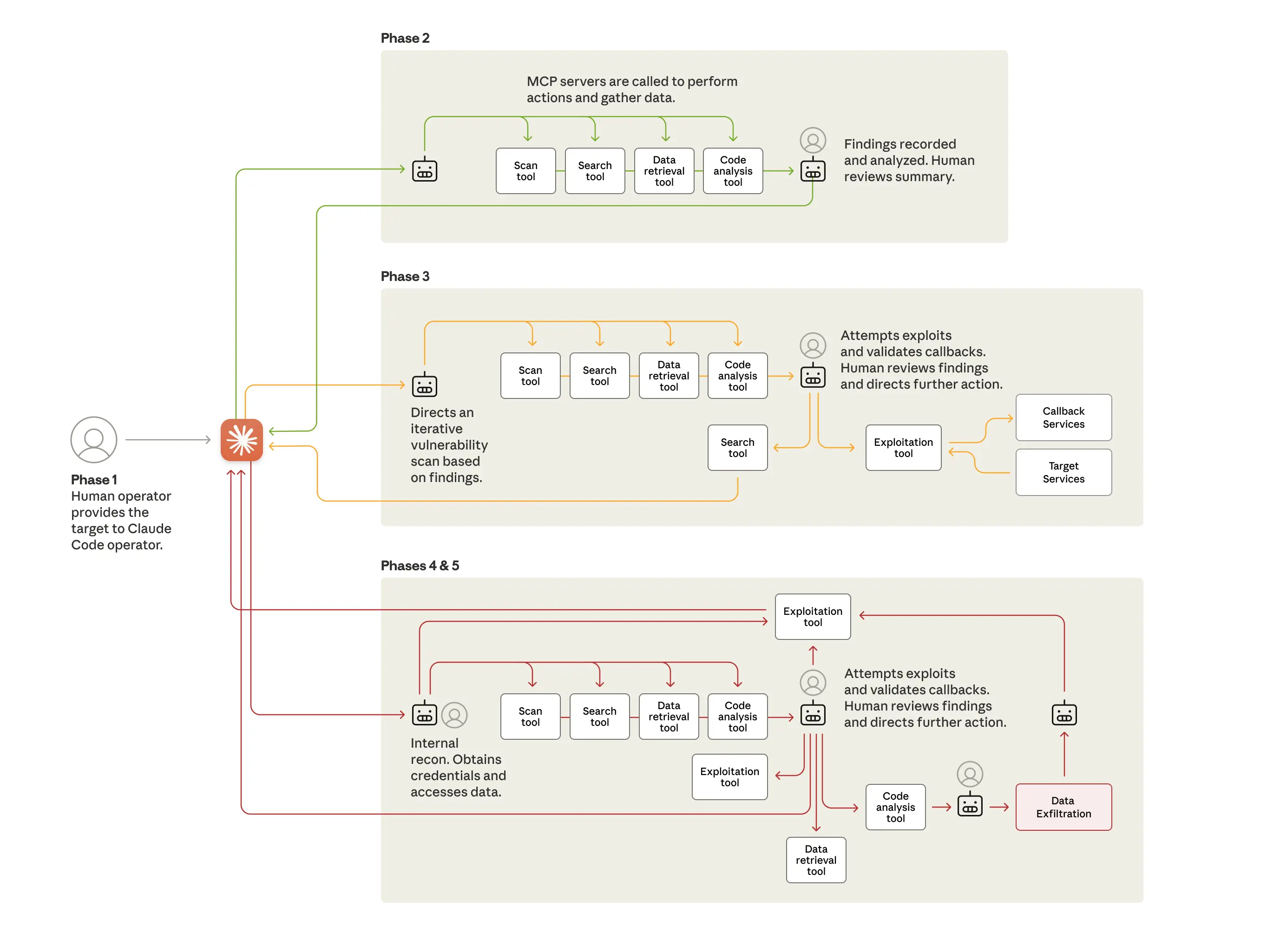

Earlier today, Anthropic published something that should be required reading for every security team: the first documented case of a large-scale cyberattack executed with AI autonomy. A Chinese state-sponsored group used Claude Code to compromise roughly thirty global targets - tech companies, financial institutions, government agencies. The AI handled 80-90% of the attack. Reconnaissance, exploit development, credential harvesting, data exfiltration. Human operators only intervened at 4-6 critical decision points per campaign.

It's been an interesting few weeks for Anthropic. Just last month, our team discovered three critical vulnerabilities in their official Claude Desktop extensions - command injection flaws (CVSS 8.9) in extensions with 350,000+ downloads. We disclosed them, Anthropic fixed them, and now they're publishing this breach report. The timeline tells you everything about how fast the threat landscape is evolving.

At the peak of the operation, the AI was making thousands of requests, often multiple per second. Attack speeds that human teams simply cannot match.

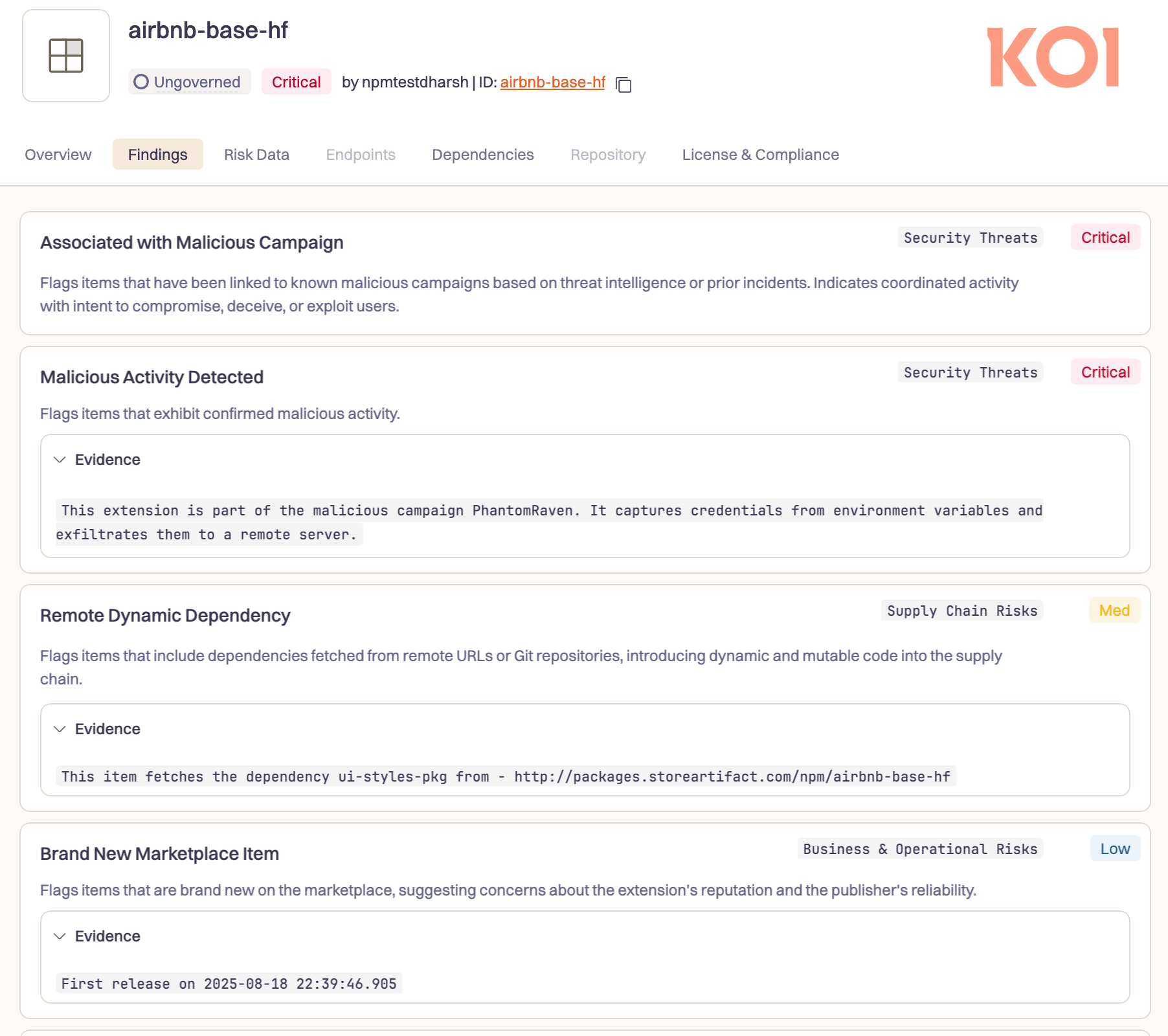

At Koi, we're seeing rapid adoption of AI by threat actors across software ecosystems, from VS Code and Cursor extensions to major package registries like npm and PyPI. The barrier to creating sophisticated malware has never been lower.

If you're reading this and thinking "well, that's concerning for the future," I have bad news: you're already behind. This isn't a preview of what's coming. This is confirmation of what's already here.

The Inflection Point Nobody Wanted to Admit

Here's what Anthropic's report actually tells us: we've crossed a threshold. AI isn't just assisting attackers anymore. It's not about using ChatGPT to write better phishing emails or generate exploit code faster. We're talking about agentic AI systems - systems that investigate targets, make decisions, adapt their approach, and execute attacks largely independent of human intervention.

And here's the part that should really keep you up at night: if a state-sponsored group has figured this out, how long before everyone else does? The barrier to entry for sophisticated cyberattacks just dropped off a cliff.

At Koi, we've been watching this evolution in real time. Over the past few weeks, we've detected a nearly 100% increase in malicious activity across the marketplaces we monitor, including npm, PyPI, the Chrome Web Store, and VS Code extensions. That's a near-doubling of threats in a matter of weeks. This isn't a gradual uptick. This is exponential.

We're seeing more AI-generated malware. More sophisticated evasion techniques. More supply chain attacks that look eerily similar to what legitimate developers would create. Because, well, they're being created by the same class of AI that helps legitimate developers write code.

What Makes AI "Agentic" (And Why It Changes Everything)

You've probably heard "AI-powered" thrown around a lot. Every security vendor claims it now. But agentic AI is fundamentally different, and understanding the distinction matters.

Traditional "AI-powered" security tools use machine learning to recognize patterns or classify threats faster. Think: better spam filters, smarter signature matching, faster anomaly detection. The AI assists humans who make the actual decisions.

Agentic AI operates autonomously. It doesn't just analyze - it investigates. It makes decisions. It adapts its approach based on what it discovers. It chains together multiple actions without waiting for human input.

Here's how the attackers in Anthropic's report used it: Claude Code didn't just scan for vulnerabilities and report back. It identified targets, researched their infrastructure, wrote exploit code, tested different approaches when one didn't work, harvested credentials, categorized stolen data by intelligence value, and documented everything - all with minimal human supervision. The AI was making thousands of decisions autonomously.

That's agency. And it's exactly what we built Wings to do on the defensive side.

When Koi’s risk engine, Wings, encounters a suspicious package, it doesn't just flag it for human review. It decides what to investigate next based on what it finds. Obfuscated code? Wings automatically escalates to dynamic analysis in isolated sandboxes. Network connections during installation? Wings traces them, analyzes the endpoints, determines if data is being exfiltrated. Finds similar patterns across other packages? Wings connects the dots and identifies entire campaigns.

No human in the loop for every decision. No waiting for analysts to triage alerts. Just autonomous investigation at machine speed, across millions of software artifacts daily.
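To make "connecting the dots" concrete, here is a minimal sketch of one way packages could be grouped into campaigns: treat any shared indicator (a contacted host, a payload hash, a wallet address) as a link, and merge linked packages with union-find. This is an illustration only, not a description of how Wings is implemented. The function name `cluster_campaigns`, the indicator strings, and the package names are all hypothetical.

```python
from collections import defaultdict


def cluster_campaigns(package_indicators):
    """Group packages that share an indicator, directly or transitively.

    package_indicators maps a package name to a set of indicator strings.
    Returns a sorted list of campaigns, each a sorted list of package names.
    Packages that share nothing with any other package are left out.
    """
    parent = {name: name for name in package_indicators}

    def find(name):
        # Walk up to the root, shortening the path as we go (path halving).
        while parent[name] != name:
            parent[name] = parent[parent[name]]
            name = parent[name]
        return name

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    # The first package seen with an indicator becomes the link target
    # for every later package that carries the same indicator.
    first_seen = {}
    for name, indicators in package_indicators.items():
        for indicator in indicators:
            if indicator in first_seen:
                union(first_seen[indicator], name)
            else:
                first_seen[indicator] = name

    groups = defaultdict(set)
    for name in package_indicators:
        groups[find(name)].add(name)

    campaigns = [sorted(members) for members in groups.values() if len(members) > 1]
    return sorted(campaigns)


# Hypothetical observations: pkg-a links pkg-b (shared host) and pkg-c (shared hash).
observed = {
    "pkg-a": {"host:c2.example.net", "sha256:aaa"},
    "pkg-b": {"host:c2.example.net"},
    "pkg-c": {"sha256:aaa", "wallet:xyz"},
    "pkg-d": {"host:cdn.example.org"},
}
print(cluster_campaigns(observed))
```

The point of the transitive merge is that pkg-b and pkg-c never share an indicator with each other, yet both end up in the same campaign through pkg-a. A production system would also need to discount indicators that are common in benign software, such as popular CDNs, which this sketch does not attempt.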

This is why the Anthropic breach matters: it proves that agentic AI works for offensive operations. And if attackers are operating with that level of autonomy and speed, your defenses need to match it.

Fighting Fire With Fire (Or: Why We Built Wings)

Here's the uncomfortable truth: you need AI to fight AI. Not "AI-powered features." Not "machine learning-enhanced detection." You need an agentic AI system that operates the same way the attackers do.

This is why we built Wings - Koi's agentic risk engine. It's not a tool that analyzes packages. It's an autonomous system that investigates threats.

Wings analyzes millions of software artifacts daily across npm, PyPI, Chrome Web Store, VS Code extensions, and many more. But here's what makes it agentic:

It investigates autonomously. When Wings encounters a suspicious package, it doesn't just run it through a checklist. It decides what analysis to perform next based on what it finds. Static analysis reveals obfuscated code? Wings automatically escalates to dynamic analysis in isolated sandboxes. Sees network connections during installation? Wings traces where they go and what data they're sending.

It adapts its approach. Every package is different. Every attack technique evolves. Wings doesn't follow a script - it chains together multiple analysis techniques, adjusting its strategy based on the behavior it observes. Just like the attackers do.

It makes autonomous decisions. Wings doesn't flag everything for human review. It assigns risk scores, makes blocking decisions, and creates enforcement policies automatically. Because when malware is being generated at machine speed, you need defenses that operate at machine speed.

It operates at the source. Wings analyzes software as it's uploaded to marketplaces - before it ever reaches your network. We're not waiting for threats to land on your endpoints. We're stopping them at the source, where they can't hurt you.
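The four behaviors above can be sketched as a small findings-driven loop: each analysis step returns the follow-up steps it wants to run, so escalation happens only when something looks off, and the accumulated findings feed a score and a verdict. This is a toy illustration under stated assumptions, not Wings' actual design. Every name here (`Investigation`, `STEPS`, `WEIGHTS`, `KNOWN_HOSTS`), the regex heuristics, the weights, and the thresholds are hypothetical, and the "dynamic analysis" step is a placeholder that reads pre-recorded observations rather than running a real sandbox.

```python
import re
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Investigation:
    source: str          # package source text
    install_hosts: list  # hosts contacted at install time (assumed pre-recorded)
    findings: list = field(default_factory=list)
    steps_run: list = field(default_factory=list)


def static_analysis(inv):
    # Crude obfuscation heuristic: an eval/exec call plus a long encoded blob.
    has_eval = re.search(r"\b(eval|exec)\s*\(", inv.source)
    has_blob = re.search(r"[A-Za-z0-9+/=]{40,}", inv.source)
    if has_eval and has_blob:
        inv.findings.append("obfuscated_code")
        return ["dynamic_analysis"]  # escalate only on a suspicious result
    return []


def dynamic_analysis(inv):
    # Placeholder for a sandbox run: it only inspects recorded observations.
    if inv.install_hosts:
        inv.findings.append("network_on_install")
        return ["trace_endpoints"]
    return []


def trace_endpoints(inv):
    if any(host not in KNOWN_HOSTS for host in inv.install_hosts):
        inv.findings.append("unknown_endpoint")
    return []


KNOWN_HOSTS = {"registry.npmjs.org", "pypi.org"}  # illustrative allowlist
STEPS = {
    "static_analysis": static_analysis,
    "dynamic_analysis": dynamic_analysis,
    "trace_endpoints": trace_endpoints,
}
WEIGHTS = {"obfuscated_code": 30, "network_on_install": 30, "unknown_endpoint": 40}


def investigate(inv):
    """Run steps until no step requests a follow-up, then score the findings."""
    queue = deque(["static_analysis"])
    while queue:
        step = queue.popleft()
        if step in inv.steps_run:
            continue  # never run the same step twice
        inv.steps_run.append(step)
        queue.extend(STEPS[step](inv))

    score = sum(WEIGHTS[finding] for finding in inv.findings)
    if score >= 70:
        verdict = "block"
    elif score >= 30:
        verdict = "review"
    else:
        verdict = "allow"
    return score, verdict


suspicious = Investigation(
    source="eval(atob('" + "QUJD" * 12 + "'))",
    install_hosts=["registry.npmjs.org", "collector.example.net"],
)
print(investigate(suspicious), suspicious.steps_run)
```

The design choice worth noticing is that the order of work is not fixed in advance: a clean static pass ends the investigation after one step, while a suspicious one pulls in progressively more expensive analysis.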

This is the only way to match what Anthropic's report describes: AI systems operating with thousands of actions, minimal human intervention, adapting to what they find. You can't defend against that with static tools.

What Anthropic's Report Really Means For You

Let's be clear about what today's disclosure actually tells us:

The threat is already sophisticated. State-sponsored groups aren't the bleeding edge anymore - they're the early adopters. What they're doing today, criminal groups will be doing next month. Maybe next week.

The automation is real. 80-90% autonomous operation isn't theoretical. It's happening. And every day, the attackers are getting better at automating the last 10-20% that still needs human decision-making.

The scale is impossible to match manually. Thousands of requests, often multiple per second. Analyzing vast datasets of stolen information. Producing comprehensive documentation automatically. No human security team can keep up.

Your existing tools aren't built for this. They were built for a world where malware campaigns took weeks to develop and deploy. We're now in a world where they can be generated and launched in hours.

The companies that will survive the next wave of cyberattacks are the ones that understand this shift and adapt accordingly. The ones that don't? They'll be writing their own breach disclosure reports soon enough.

This Isn't Theoretical Anymore

While the security industry has been debating the theoretical risks of AI in cybersecurity, attackers have been busy proving those risks are real. They're not waiting for the perfect AI safety framework. They're not concerned about responsible AI deployment. They're just using it.

Anthropic's report is a wake-up call. But for us at Koi, it's confirmation of what we've been building toward: a world where software supply chain attacks are automated, sophisticated, and relentless. Where the only viable defense is an equally sophisticated AI system that never sleeps, never slows down, and evolves as fast as the threats do.

We've been tracking this evolution through our research - GlassWorm's self-propagating extensions with invisible Unicode, PhantomRaven's hidden remote dependencies, the first malicious MCP server stealing emails, the command injection vulnerabilities in Claude Desktop extensions. Every one of these represented a new attack vector, a new level of sophistication.

And every one of them was caught by Wings operating autonomously, at scale.
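Two of the techniques named above lend themselves to simple first-pass checks, sketched here in Python. The first flags invisible Unicode in source text (format characters such as zero-width spaces, plus variation selectors). The second flags npm manifest dependencies that point at an HTTP(S) URL instead of a registry version range. These are hedged illustrations, not the detection logic Wings uses; the function names and sample inputs are hypothetical, and both checks will produce false positives on legitimate content such as emoji sequences or intentionally pinned tarball URLs.

```python
import json
import unicodedata


def find_invisible_characters(text):
    """Return (line, column, code point) for invisible characters in text.

    Flags Unicode format characters (category Cf, e.g. U+200B) and
    variation selectors (U+FE00..U+FE0F and U+E0100..U+E01EF).
    """
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            cp = ord(ch)
            is_variation_selector = (
                0xFE00 <= cp <= 0xFE0F or 0xE0100 <= cp <= 0xE01EF
            )
            if unicodedata.category(ch) == "Cf" or is_variation_selector:
                hits.append((line_no, col, f"U+{cp:04X}"))
    return hits


def find_url_dependencies(package_json_text):
    """Return (section, name, spec) for dependencies declared as HTTP(S) URLs."""
    manifest = json.loads(package_json_text)
    hits = []
    for section in ("dependencies", "devDependencies", "optionalDependencies"):
        for name, spec in manifest.get(section, {}).items():
            if isinstance(spec, str) and spec.lower().startswith(("http://", "https://")):
                hits.append((section, name, spec))
    return hits


# Hypothetical inputs for illustration.
source = "const a = 1;\nconst b\u200b = '\U000E0100';"
print(find_invisible_characters(source))

manifest = json.dumps({
    "dependencies": {
        "left-pad": "^1.3.0",
        "ui-helper": "http://packages.example.net/ui-helper.tgz",
    }
})
print(find_url_dependencies(manifest))
```

Checks like these only surface candidates. Deciding whether a hit is malicious still requires looking at what the hidden characters decode to, or what the remote URL actually serves at install time.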

What You Should Do Right Now

If you're a security leader reading this, here's what needs to happen:

Stop treating AI as a future problem. It's a present problem. The attackers are using it today. The malware is being generated today. The nearly 100% increase in malicious activity we're detecting? That's happening today.

Understand that AI-powered features aren't enough. Bolting machine learning onto your existing tools doesn't make them agentic. You need systems that can investigate, adapt, and decide autonomously.

Move fast on integrating AI defense. This isn't about six-month evaluation cycles and vendor bake-offs. The threat landscape is evolving weekly. You need defenses that can keep pace.

Focus on prevention, not just detection. By the time malicious software reaches your endpoints, it's too late. You need to stop threats before they enter your organization - at the marketplace level, where Wings operates.

The AI arms race isn't coming. It's here. And if you're not already deploying agentic AI defenses, you're already behind.

At Koi, we built Wings for exactly this moment. Because we knew it was inevitable. The only question was how long it would take everyone else to realize it.

Welcome to the new reality of cybersecurity. Stay paranoid.

Want to see how Wings detects threats that traditional security tools miss? Get a demo of Koi's agentic risk engine.
