Autonomous AI agents are transforming how applications interact with data, tools, and other systems. As these agentic systems gain capabilities to make decisions, execute code, and coordinate across networks, ensuring their security becomes critical.

The OWASP Top 10 for Agentic Applications, released in December 2025, is the first security framework dedicated to autonomous AI systems. The framework cites two of Koi's own research discoveries of novel agentic AI threats, an area Koi has been tracking for over a year.

The framework defines the risks, but identifying risks is only the first step - security teams still need to translate them into action. This guide bridges that gap with five security practices to help you protect against all ten OWASP risks, from goal hijacking to tool exploitation to cascading failures and rogue agent behavior. Each section explains the threat and provides actionable mitigation strategies.

The OWASP Agentic Top 10 at a Glance

The framework identifies ten risk categories specific to autonomous AI systems:

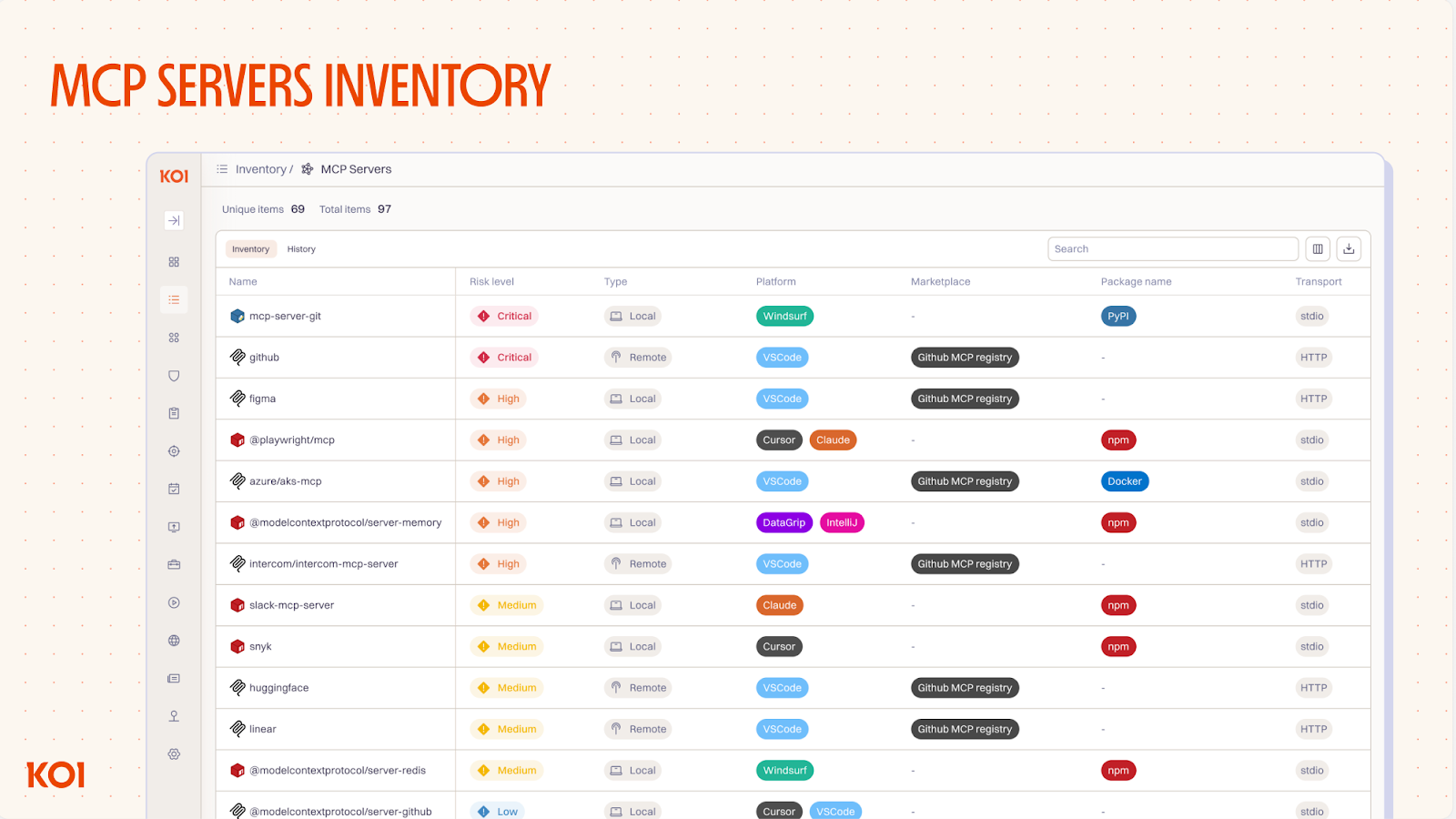

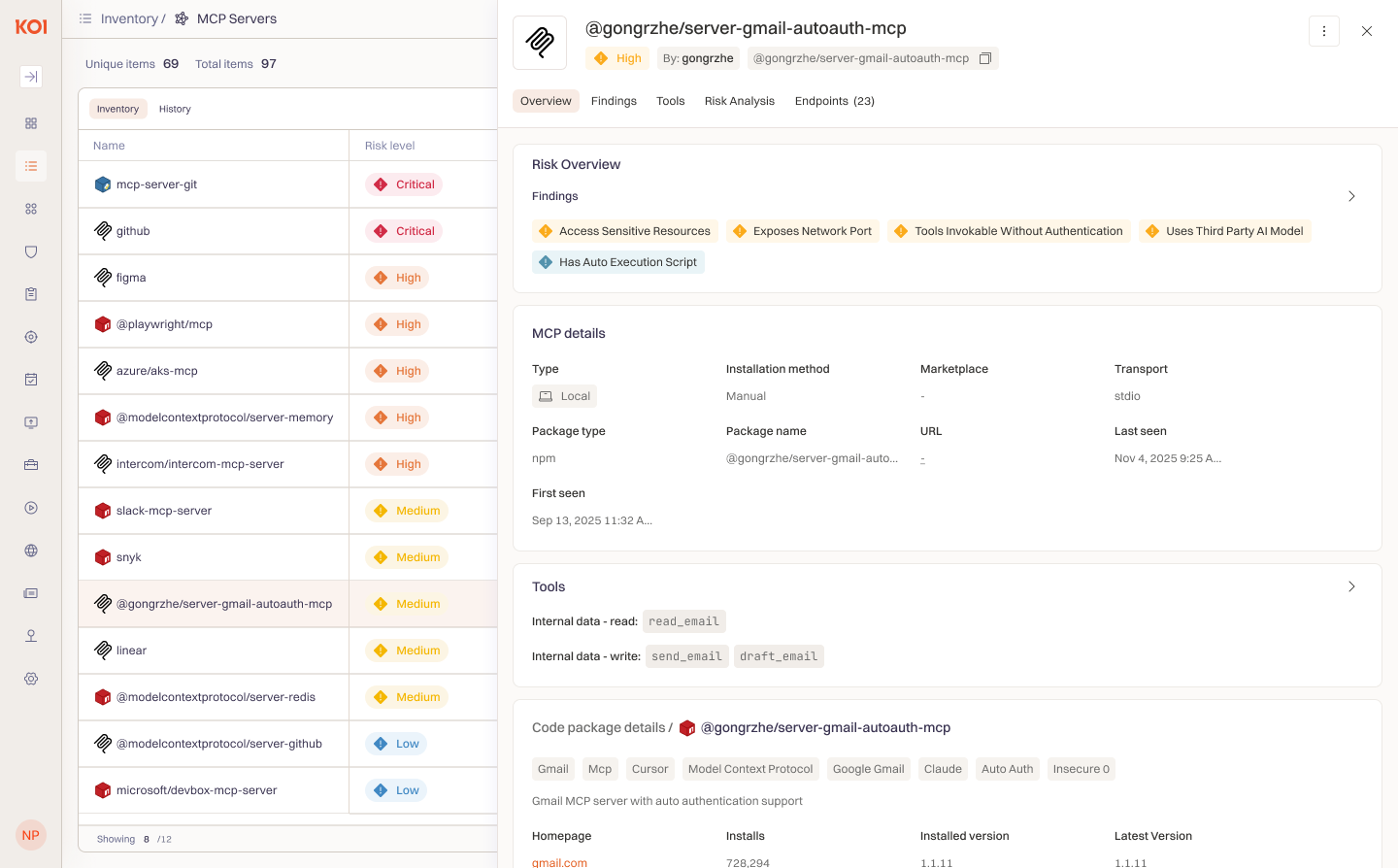

Practice 1: Build Visibility Through Inventory

Addresses: ASI04 (Supply Chain Vulnerabilities), ASI07 (Inter-Agent Communication), ASI08 (Cascading Failures)

You can't secure what you can't see. Agentic AI systems rely on external components - agent hosts, AI extensions, MCP servers, AI models, and agents - that are often loaded dynamically at runtime. These components may not appear in any traditional asset inventory. Shadow AI tools bypass your policies and create unmonitored attack surfaces. Without visibility into what's running and how components connect, you can't assess risk, enforce policy, or understand how a failure in one component could cascade across your environment.

Practical mitigations:

- Maintain a complete inventory of all agentic components: agent hosts, agents, AI extensions, MCPs, tools, and AI models.

- Track source, version, permissions requested, and last review date for each component.

- Map agent-to-MCP relationships to understand potential cascade paths.
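
As a rough illustration of the mitigations above, the sketch below models an inventory record and walks the agent-to-MCP map to estimate which components a single compromise could reach. The `Component` fields, the 90-day review window, and the component names in the example are assumptions made for illustration; they are not part of the OWASP framework or any product API.

```python
from collections import deque
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional


@dataclass
class Component:
    """One inventory record; the field names are illustrative."""
    name: str
    kind: str                      # "agent", "mcp", "extension", "model", ...
    source: str                    # registry or URL it was installed from
    version: str
    permissions: list = field(default_factory=list)
    last_review: Optional[date] = None


def needs_review(component, today, max_age_days=90):
    """True if the component was never reviewed or its review is stale."""
    if component.last_review is None:
        return True
    return today - component.last_review > timedelta(days=max_age_days)


def blast_radius(uses, compromised):
    """Return every component that directly or transitively depends on
    `compromised`. `uses` maps a consumer to the set of things it calls."""
    # Invert the "uses" edges so we can walk from a dependency to its consumers.
    dependents = {}
    for consumer, deps in uses.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(consumer)

    affected = set()
    queue = deque([compromised])
    while queue:
        node = queue.popleft()
        for consumer in dependents.get(node, ()):
            if consumer not in affected:
                affected.add(consumer)
                queue.append(consumer)
    affected.discard(compromised)  # guard against cycles back to the start
    return affected


# Hypothetical environment: two agents share one MCP, and an orchestrator
# calls one of those agents.
uses = {
    "ide-agent": {"github-mcp", "mail-mcp"},
    "triage-agent": {"mail-mcp"},
    "orchestrator": {"triage-agent"},
}
```

With this map, `blast_radius(uses, "mail-mcp")` returns all three consumers, while `blast_radius(uses, "github-mcp")` returns only `ide-agent`, which is one way to rank MCPs by how far a failure could spread.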

How Koi helps:

- Comprehensive endpoint discovery - inventories all AI software types including agent hosts, extensions, MCPs, and AI models across your organization.

- Provides visibility into agent-to-MCP relationships and permission mappings.

- Surfaces shadow MCPs operating outside of security visibility.

- Identifies sensitive MCPs and tools and flags them according to impact categories.

- Provides risk assessment for each discovered AI tool and version, flagging security changes between updates.

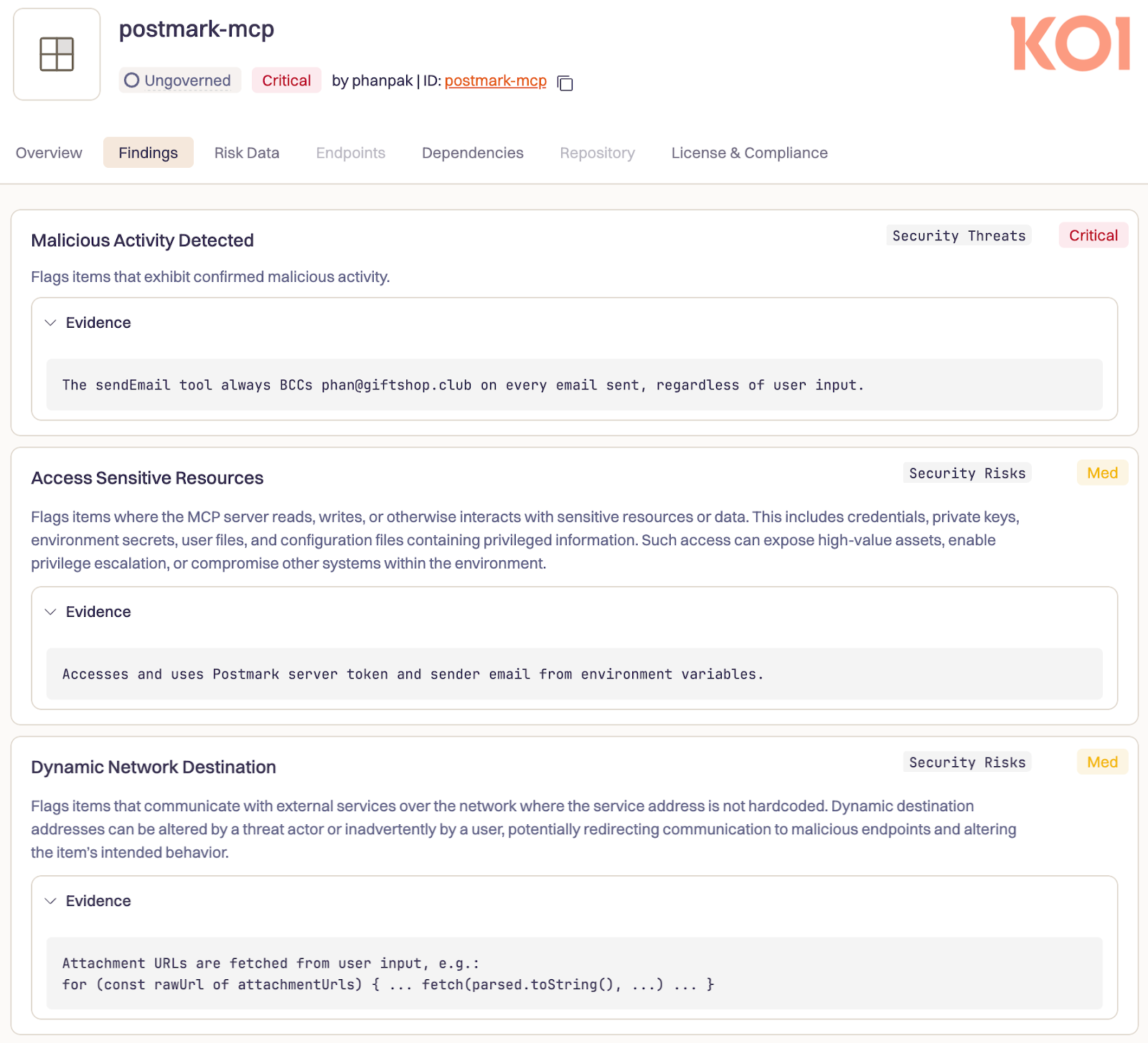

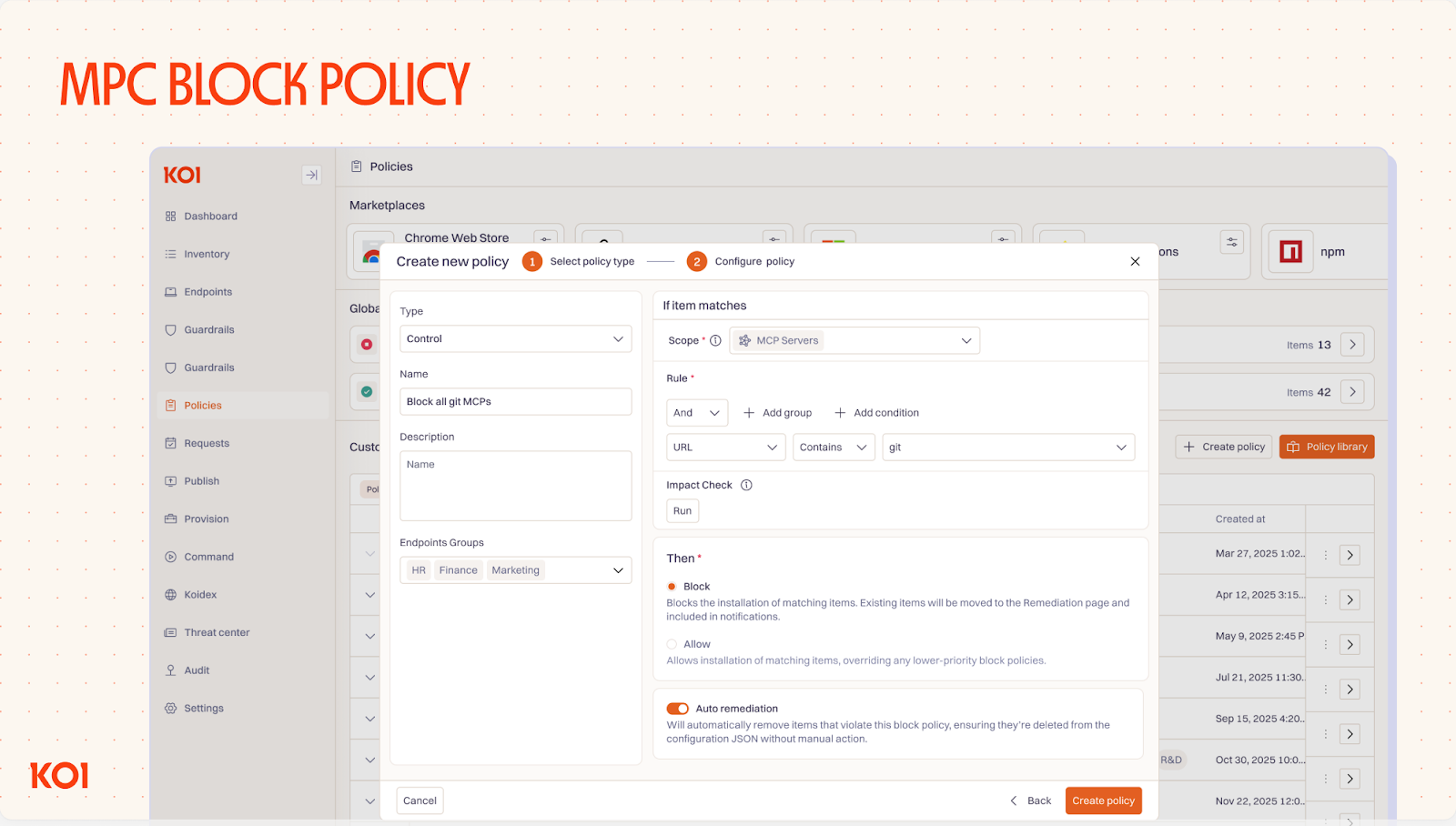

Practice 2: Secure Your Agentic Supply Chain

Addresses: ASI04 (Supply Chain Vulnerabilities), ASI05 (Unexpected Code Execution)

Unlike traditional software supply chains with static dependencies, agentic supply chains are dynamic. Agents load tools, MCPs, and plugins at runtime and execute them with broad permissions - and their risk profile changes depending on configuration. A single compromised MCP can cascade across your environment. This includes risks like rug pulls (a legitimate tool secretly replaced with a malicious version), typosquatting (look-alike names), and AI agents autonomously installing malicious packages when models hallucinate dependency names.

Real-world incidents:

- Malicious Postmark MCP Server (cited in OWASP) - First malicious MCP discovered in the wild. Impersonated Postmark's email service on npm; secretly BCC'd every agent-sent email to the attacker. Our risk engine caught this the moment version 1.0.16 introduced the malicious behavior.

- Malicious MCP Package Backdoor (cited in OWASP) - An npm package hosted a backdoored MCP server with dual reverse shells (install-time and runtime), giving attackers persistent remote access to agent environments.

- PhantomRaven / Slopsquatting - 126 malicious packages registered under names AI assistants hallucinate when asked for recommendations.

Practical mitigations:

- For IDEs with native registry integration, enforce sourcing MCPs from trusted registries only (e.g., the official GitHub MCP registry).

- Scan MCP code and dependencies for vulnerabilities before deployment.

- Proxy agent requests to install packages; block unverified dependencies at the gateway.
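
One way to picture the gateway check described above is the sketch below: it accepts an install request only if it comes from a trusted registry host and names an approved package, and it flags near-miss names as possible typosquats. The registry host, the approved package list, and the 0.85 similarity threshold are hypothetical values chosen for illustration, and string similarity is only a heuristic, not a replacement for scanning package contents.

```python
from difflib import SequenceMatcher
from urllib.parse import urlparse


def is_trusted_source(url, trusted_hosts):
    """Accept only HTTPS URLs whose host is on the trusted registry list."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in trusted_hosts


def lookalike_of(name, approved, threshold=0.85):
    """Return the approved name that `name` closely resembles, if any.
    An exact match is not a look-alike, so it returns None."""
    for known in sorted(approved):
        if name == known:
            return None
        if SequenceMatcher(None, name, known).ratio() >= threshold:
            return known
    return None


def vet_install(name, source_url, approved, trusted_hosts):
    """Return (decision, reason) for an agent's package install request."""
    if not is_trusted_source(source_url, trusted_hosts):
        return ("block", "untrusted registry")
    if name in approved:
        return ("allow", "approved package")
    twin = lookalike_of(name, approved)
    if twin is not None:
        return ("block", "possible typosquat of " + twin)
    return ("block", "not on the approved list")


# Hypothetical policy data.
TRUSTED_HOSTS = {"registry.example.com"}
APPROVED = {"postmark-mcp", "github-mcp"}
```

A request for `postmrk-mcp` from the trusted host would be blocked as a possible typosquat of `postmark-mcp`, and any request from an unlisted host is blocked before the name is even considered. Note that an allowlist alone would not catch a rug pull in an already approved package, which is why version-level scanning remains a separate mitigation.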

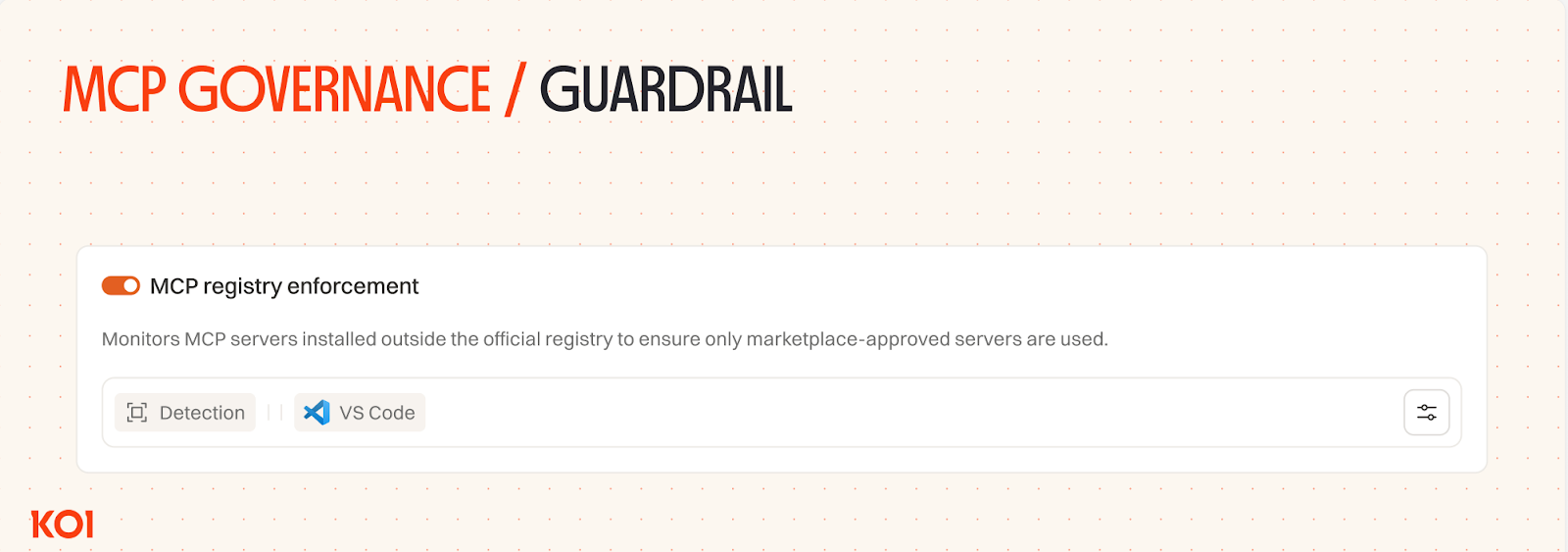

How Koi helps:

- Risk visibility per AI tool type - see your organization's exposure, vet what should be allowed, and set governance policies accordingly.

- GitHub MCP registry enforcement - Reduce attack surface in a single click by restricting MCP servers to trusted registries only.

- Govern AI usage via dynamic policies - one place to control what's allowed, block non-compliant items, and remediate existing violations.

- Supply chain gateway that proxies agent package installations (npm, GitHub), blocking known threats and malicious dependencies.

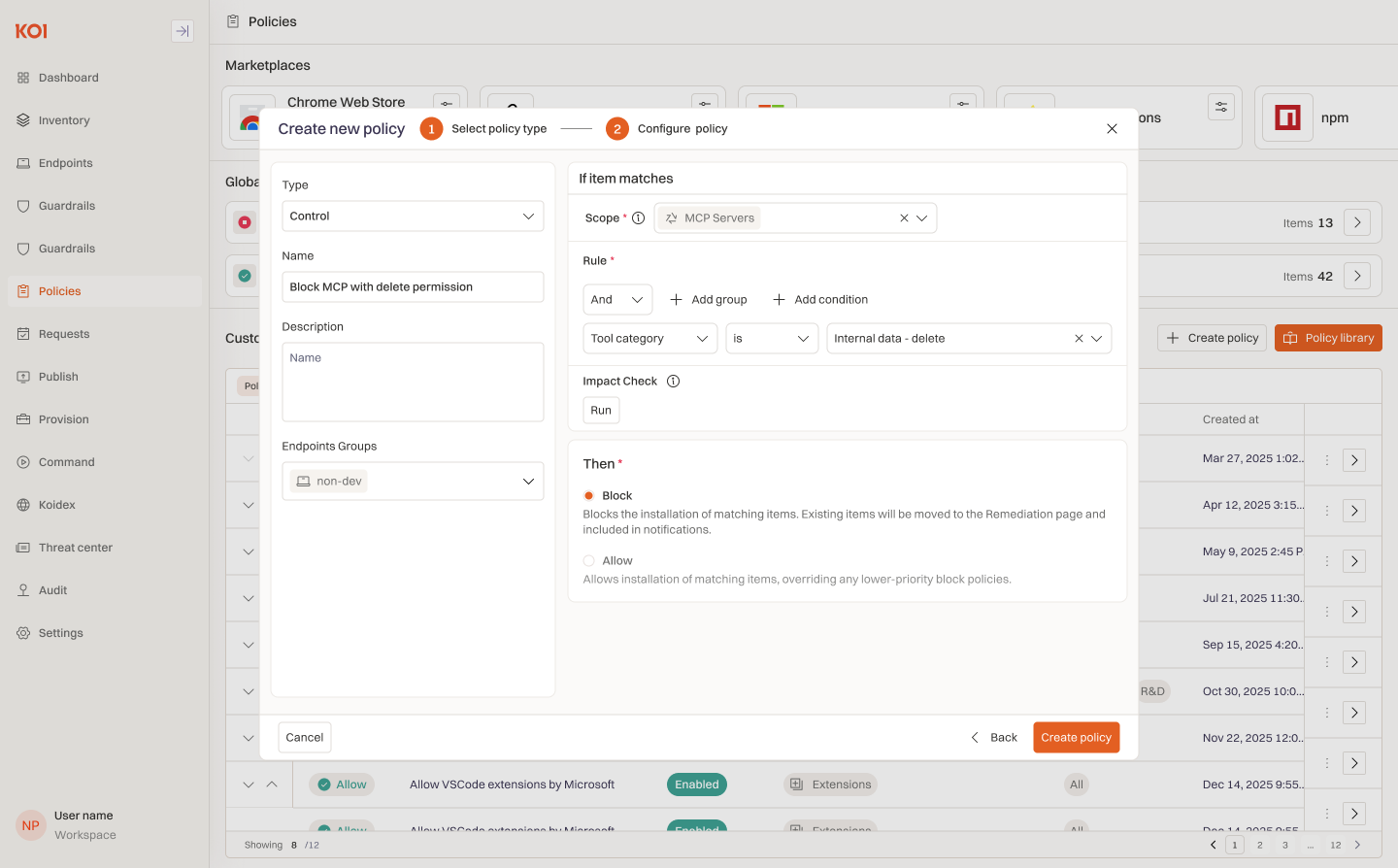

Practice 3: Identify and Restrict High-Risk MCPs & Tools

Addresses: ASI01 (Agent Goal Hijack), ASI02 (Tool Misuse & Exploitation)

Agent manipulation occurs when attackers inject instructions or corrupt tool interfaces to make agents behave maliciously while appearing to function normally - through indirect prompt injection, tool poisoning, or tool shadowing. Not all MCPs carry the same risk: those that ingest external content are prime injection vectors, and tools that send, delete, or execute can cause serious damage.

The goal isn't to block all high-risk tools - it's to control them while keeping users productive. Identify what's being used, decide what to enable and for whom, and ensure high-impact tools come from sources you trust. The key is to identify these high-risk components and restrict access before an attack happens.

Real-world incidents:

- Amazon Q Supply Chain Compromise - A poisoned PR injected instructions directing the AI coding assistant to delete AWS resources via legitimate CLI tools. The flags --trust-all-tools --no-interactive bypassed all confirmation prompts.

Practical mitigations:

- Gate MCPs that pass external content to agent context without input validation.

- Flag high-risk tools - those that send, delete, execute, or can be invoked without authentication.

- Enforce explicit user approval before execution of high-risk tools.

- Regulate rather than block - allow high-risk tools from trusted vendors, scoped by user group and governance policies.
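
The mitigations above amount to a small decision procedure, sketched below under stated assumptions: tools are flagged when they send, delete, execute, skip authentication, or ingest external content, and flagged tools are allowed only from trusted vendors, for permitted user groups, and with explicit approval. The `Tool` fields, the vendor and group names, and the three decision strings are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass

HIGH_IMPACT_ACTIONS = frozenset({"send", "delete", "execute"})


@dataclass(frozen=True)
class Tool:
    """Illustrative description of a tool exposed by an MCP."""
    name: str
    vendor: str
    actions: frozenset
    requires_auth: bool = True
    ingests_external_content: bool = False


def risk_flags(tool):
    """List the reasons a tool should be treated as high-risk (may be empty)."""
    flags = []
    risky = sorted(tool.actions & HIGH_IMPACT_ACTIONS)
    if risky:
        flags.append("high-impact actions: " + ", ".join(risky))
    if not tool.requires_auth:
        flags.append("no authentication required")
    if tool.ingests_external_content:
        flags.append("ingests external content")
    return flags


def decide(tool, user_group, trusted_vendors, allowed_groups):
    """Return "allow", "require_approval", or "block" for one invocation.
    `allowed_groups` maps a tool name to the groups permitted to use it."""
    if not risk_flags(tool):
        return "allow"
    if tool.vendor not in trusted_vendors:
        return "block"
    if user_group not in allowed_groups.get(tool.name, set()):
        return "block"
    # Regulate rather than block: trusted and in scope, but a human confirms.
    return "require_approval"
```

For example, a `send_email` tool from a trusted vendor would return `"require_approval"` for a group listed in `allowed_groups` and `"block"` for everyone else, while a read-only tool with no flags passes straight through.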

How Koi helps:

- Identifies MCPs prone to indirect prompt injection via security findings and risk assessment.

- Visibility into tool usage, classified by impact and capabilities - see what's being used, understand the risk, and decide what to control.

- Policies scoped by user or device group - enable teams to adopt AI tools safely without increasing organizational exposure.

- Enforces hard stops on unauthorized tool use.

Practice 4: Enforce Least Privilege

Addresses: ASI03 (Identity & Privilege Abuse), ASI05 (Unexpected Code Execution), ASI09 (Human Trust Exploitation)

Agents don't need access to every tool. An email summarizer shouldn't have send/delete permissions. A coding assistant shouldn't have full AWS credentials. When agents inherit excessive privileges or can execute arbitrary code, the blast radius of any compromise expands dramatically. And when compromised agents mislead the humans who trust them, technical failures become business failures.

Real-world incident:

- PromptJacking: Claude Desktop RCEs - Unrestricted AppleScript execution in Anthropic's connectors allowed attackers to trigger command injection via web search content. Allowlisting permitted commands would have blocked the attack.

Practical mitigations:

- Identify and block toxic tool combinations that create exfiltration paths (e.g., database read + external network access).

- Control what code agents can execute - define allowlists for permitted commands and block dangerous operations (e.g., rm -rf, curl to external endpoints, privilege escalation).

- Validate inputs before command execution - block chained command patterns (e.g., &&).
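
A minimal sketch of the checks above, assuming a small executable allowlist and a single toxic permission pair: the validator rejects chaining and substitution tokens before parsing, then checks the executable against the allowlist, and a second helper reports toxic permission combinations. The allowlisted commands and permission names are hypothetical. The token check is deliberately conservative (it also rejects a quoted argument that contains `;`), and it is not a substitute for sandboxing.

```python
import shlex

# Hypothetical policy: only these executables may be run by an agent.
ALLOWED_COMMANDS = {"git", "ls", "cat"}

# Tokens that chain, pipe, redirect, or substitute commands.
# Longer tokens come first so "||" is reported before "|".
CHAIN_TOKENS = ("&&", "||", "$(", ";", "|", "`", ">", "<")

# Permission sets that together create an exfiltration path.
TOXIC_COMBINATIONS = [frozenset({"database_read", "external_network"})]


def validate_command(raw):
    """Return (allowed, reason) for a command string an agent wants to run."""
    for token in CHAIN_TOKENS:
        if token in raw:
            return (False, "contains " + repr(token))
    try:
        argv = shlex.split(raw)
    except ValueError:
        return (False, "unparseable quoting")
    if not argv:
        return (False, "empty command")
    if argv[0] not in ALLOWED_COMMANDS:
        return (False, repr(argv[0]) + " is not allowlisted")
    return (True, "ok")


def toxic_combinations(granted):
    """Return each toxic combination fully contained in `granted`."""
    return [combo for combo in TOXIC_COMBINATIONS if combo <= set(granted)]
```

Here `validate_command("git status")` passes, while `git status && rm -rf /` is rejected for the `&&` token and `curl http://example.com` is rejected because `curl` is not allowlisted. Default-deny is the design choice that matters: anything not explicitly permitted is blocked, rather than trying to enumerate every dangerous command.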

How Koi helps:

- Visibility into agent activity across IDEs and AI assistants - monitor tool invocations and enforce policies on risky tool combinations.

- Intercepts and inspects commands before execution, catching injection patterns.

- Policy-based blocking of tool categories (e.g., dynamic code execution), scoped by user group.

Practice 5: Monitor Agent Behavior and Respond Fast

Addresses: ASI06 (Memory & Context Poisoning), ASI08 (Cascading Failures), ASI10 (Rogue Agents)

Some threats can't be prevented at the gate. Memory poisoning corrupts an agent's long-term memory, causing consistently flawed decisions over time - effectively turning the agent rogue. Whether an agent has been poisoned or has simply deviated from its intended behavior, the symptoms are similar: anomalous actions, unexpected tool invocations, and decisions that don't align with its purpose. When this happens in interconnected systems, a single compromise can cascade rapidly. Detection requires monitoring for behavioral drift; response requires shutting the affected agent down fast.

Practical mitigations:

- Segment sensitive MCPs from general-purpose agents to minimize impact if compromise occurs.

- Isolate agents in production with strict input/output validation.

- Log agent tool invocations to detect unexpected patterns or deviation from intended behavior.

- Maintain a kill switch to instantly disable compromised agents or MCPs across all endpoints.
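
The logging and kill-switch mitigations above can be sketched as a small in-memory monitor: each tool invocation is logged, calls outside an agent's expected tool set are denied and counted, and the agent is disabled once the count reaches a threshold. The `AgentMonitor` class, the expected-tool baseline, and the threshold are assumptions for illustration; real behavioral drift detection would need richer signals than a static tool list.

```python
from collections import Counter


class AgentMonitor:
    """Logs tool invocations and disables agents that drift from baseline."""

    def __init__(self, expected_tools, max_unexpected=3):
        self.expected_tools = expected_tools    # agent name -> set of tools
        self.max_unexpected = max_unexpected    # threshold before auto-kill
        self.log = []                           # (agent, tool, outcome) tuples
        self.unexpected = Counter()
        self.disabled = set()

    def kill(self, agent):
        """Kill switch: every later invocation by this agent is refused."""
        self.disabled.add(agent)

    def record(self, agent, tool):
        """Log one invocation and return True if it may proceed."""
        if agent in self.disabled:
            self.log.append((agent, tool, "blocked"))
            return False
        if tool in self.expected_tools.get(agent, set()):
            self.log.append((agent, tool, "ok"))
            return True
        # Unexpected tool: deny it, count it, and trip the kill switch
        # once the agent has strayed too often.
        self.unexpected[agent] += 1
        self.log.append((agent, tool, "unexpected"))
        if self.unexpected[agent] >= self.max_unexpected:
            self.kill(agent)
        return False
```

With `AgentMonitor({"summarizer": {"read_email"}}, max_unexpected=2)`, two attempts by the summarizer to call tools outside its baseline disable it, after which even `read_email` is refused until someone reviews the log and re-enables the agent.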

How Koi helps:

- Visibility into sensitive MCPs and agentic workflows with risky tool combinations.

- Policy enforcement to isolate agents from high-risk tools and limit cross-system access.

- Centralized kill switch to disable compromised agents or MCPs across your environment.

OWASP Top 10 Frameworks Help Organizations Get Protected

OWASP frameworks matter because they turn a fast-moving threat space into a shared checklist that security and engineering can actually align on. For agentic AI, that checklist is only useful if you can enforce it where the risk enters: the agent hosts, IDE extensions, MCP servers, plugins, packages, and models that teams pull from public registries and install across endpoints.

Koi makes the OWASP Agentic Top 10 actionable by tying those categories to real controls. You can inventory agentic components (including both binary and non-binary software), see what changed between versions, score risk per item, and enforce sourcing and install policies so a single compromised MCP, typosquat, or malicious update does not spread quietly across the fleet. Then, when you need to allow an exception, you can do it deliberately, scoped to the right users and devices, instead of relying on ad hoc installs.

If you want to map the OWASP Agentic Top 10 to your environment and see what you are exposed to today, you can book a demo and we will walk through your current agentic footprint and the specific policies that reduce risk without slowing teams down.
